The surge in AI-generated content isn’t just flooding the internet with fakes — it’s making the public skeptical of real evidence, too. As companies discover that even genuine proof may not be enough to counter false accusations, Joshua Tucker and Paul Connolly of Kroll and George Vlasto of Resolver offer strategies for maintaining corporate credibility in the age of generative AI.

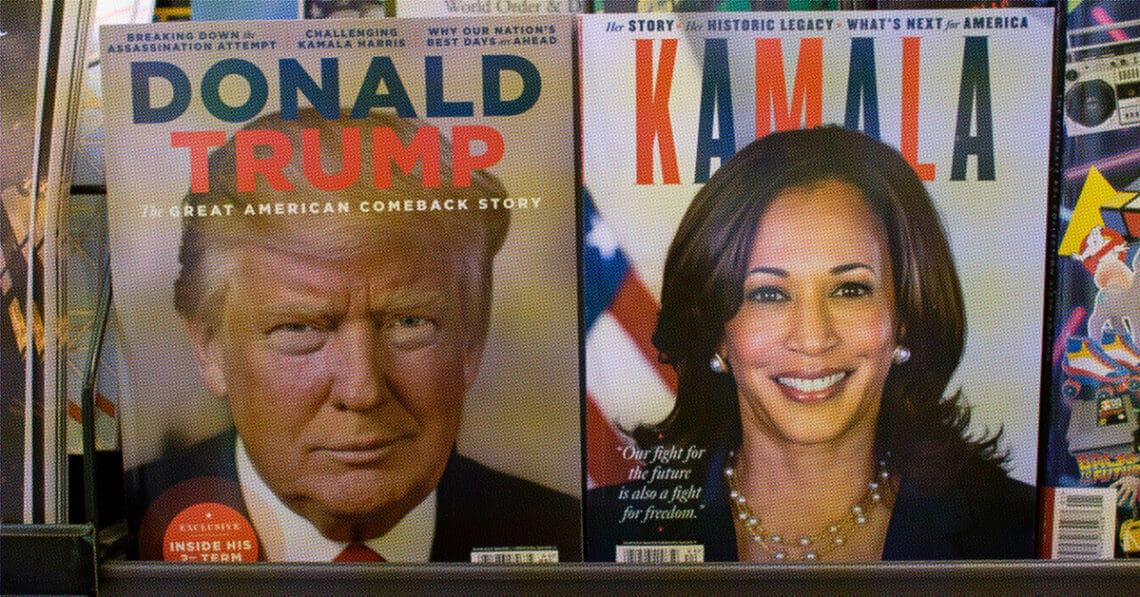

2024 was dubbed the “Year of the Election”: voters in countries representing more than half the world’s population headed to the polls. This electoral wave coincided with the rising prominence of generative AI (GenAI), sparking debate about its potential impact on election integrity and public perception. Businesses, like political players, now face a landscape in which GenAI is both a risk and an opportunity.

GenAI’s ability to produce highly sophisticated and convincing content at a fraction of the previous cost has raised fears that it could amplify misinformation. The dissemination of fake audio, images and text could reshape how voters perceive candidates and parties.

Businesses, too, face challenges in managing their reputations and navigating this new terrain of manipulated content. Corporate boards need to consider the strategic impact of generative AI. The evidence so far suggests that one immediate implication is an erosion of trust in institutions and their credibility. Corporate leaders rely on being able to communicate confidently with the market and on having that communication received as credible. Growing uncertainty about the veracity of political and business information makes this job more challenging.

The ‘Liar’s Dividend’ & the challenge for truth

Conversations about GenAI surged by 452% in the first eight months of 2024 compared with the same period in 2023, according to a Brandwatch analysis, and many expected 2024 to be the year that deepfakes and other GenAI-driven misinformation would wreak havoc in global elections. Reality has proved more nuanced than those early fears suggested.

While deepfake videos and images did gain some traction, more conventional forms of AI-generated content, such as text and audio, posed the greatest challenges. In the U.S., Europe and India, AI-generated text and audio proved harder to detect, more believable and cheaper to produce than deepfake images and videos.

Businesses will be impacted differently by GenAI depending on their market positioning and exposure. For technology companies, a key concern is misuse of their products to create and disseminate problematic synthetic content or the hosting and dissemination of such content on their platforms.

Public scrutiny and regulatory attention will likely intensify on technology offerings that enable the creation and distribution of synthetic content deemed politically, socially or financially damaging. Financial institutions need to be mindful of the market impact of synthetic content. For example, GenAI content that appears to signal a substantial change in fiscal or trade policy from a government leader or election candidate could destabilize financial markets. For any business with a degree of public exposure, GenAI content poses a reputational risk, either through misleading links drawn between the firm and a political agenda or through synthetic content that directly targets the firm over its positioning on controversial political or geopolitical issues.

One of the significant concerns to emerge with GenAI is what has been dubbed the “Liar’s Dividend”: as fake content becomes more prevalent, it becomes harder to convince people of the truth and easier to dismiss even genuine content as fabricated. The result is declining trust in media and information sources.

In politically polarized countries such as the U.S., the Liar’s Dividend has contributed to a situation in which politicians and their supporters struggle to agree on basic facts.

For businesses, this phenomenon also poses serious risks. If a company faces accusations, even presenting real evidence to refute them might not be enough to convince the public that the claims are false. As people become more skeptical of all content, it becomes harder for companies to manage their reputations effectively.

What have we learned so far?

Despite early concerns, 2024 has not yet seen the dramatic escalation of GenAI manipulation in elections that many feared. Several factors have contributed to this:

- Public awareness: The public’s ability to detect and call out AI-generated content has improved significantly. Regulators, fact-checking organizations and mainstream media have proactively flagged misleading content, reducing its impact.

- Regulatory readiness: Many countries have introduced regulations to address the misuse of GenAI in elections. Media outlets and social media platforms have also adopted stricter policies to combat misinformation, reducing the spread of AI-manipulated content.

- Quality limitations: The production quality of some GenAI-generated content has fallen short of what many commentators had feared. This has made it easier to identify and call out fake content before it can go viral.

However, there have still been notable instances of GenAI manipulation during the 2024 election cycle:

- France: Deepfake videos of Marine Le Pen and her niece Marion Maréchal circulated on social media, leading to significant public debate before being revealed as fake.

- India: GenAI-generated content was used to stir sectarian tensions and undermine the integrity of the electoral process.

- U.S.: There were instances of GenAI being used to create fake audio clips mimicking Joe Biden and Kamala Harris, causing confusion among voters. One political consultant involved in a GenAI-based robocall scheme now faces criminal charges.

For businesses, the lessons from political GenAI misuse are clear: The Liar’s Dividend is a real threat, and companies must be prepared to counter misinformation and protect their reputations. As people become more aware of how easily content can be manipulated, they grow more skeptical of what they see and hear, which makes managing crises, responding to accusations and protecting brand credibility even more challenging.

At the same time, proving a negative — something did not happen — has always been difficult. In a world where GenAI can be used to create false evidence, this challenge is magnified. Companies need to anticipate this by building robust crisis management plans and communication strategies.

For boards and corporate leaders, readiness is key

Although the new information environment is daunting and fast-changing, boards and corporate leaders can take action to prepare themselves and their organizations. Situational awareness is key: understanding emerging trends, learning from others’ experience and spotting direct threats to the business’s interests early are all important.

Monitoring the digital environment around the business is essential; you want to be the first to know of a potential issue. Forewarned is forearmed. Businesses could also consider using technology, such as content authentication aligned with the Coalition for Content Provenance and Authenticity (C2PA) standard, to mark their official communications as authentic and limit the scope for impersonation. Most importantly, though, organizations should plan how they would deal with a problematic issue, even one that is artificially manufactured. Do not underestimate the importance of clarity and speed in responding to synthetic content.

Looking on the bright side

While much of the discussion around GenAI focuses on its negative aspects, there are positive applications as well, especially in political campaigns, which offer lessons for businesses:

- South Korea: AI avatars were used in political campaigns to engage younger voters, showcasing the technology’s potential for personalized and innovative voter interaction.

- India: Deepfake videos of deceased politicians, authorized by their respective parties, were used to connect with voters across generations, demonstrating a creative way to use GenAI in a positive light.

- Pakistan: The Pakistan Tehreek-e-Insaf (PTI) party, led by jailed former Prime Minister Imran Khan, effectively used an AI-generated victory speech after its surprising electoral win. The video received millions of views and resonated with voters, demonstrating GenAI’s ability to amplify campaign messages in powerful ways.

For businesses, the key takeaway from the 2024 election cycle is the importance of planning for the risks posed by GenAI. While the technology has not yet fundamentally reshaped the information environment, its potential to do so remains. Companies must proactively address AI-generated misinformation and develop strategies to separate truth from falsehood.

At the same time, businesses should also explore the positive uses of GenAI to engage with their audiences in creative ways, much like political campaigns have done. As the technology evolves, firms that are able to harness its potential while mitigating its risks will be better positioned to navigate the complexities of the modern information landscape.