The use of AI is projected to grow exponentially in the near future. James Bone discusses what this means for internal audit and outlines the key elements of an AI audit framework.

Artificial intelligence has become a national strategic imperative for countries as diverse as China, Russia, the U.K., France, Canada and 13 other nations.[1],[2],[3],[4] In February 2019, President Trump signed an Executive Order (EO 13859) to ensure the U.S. maintains leadership in artificial intelligence and, importantly, to address gaps in AI standards in order to promote and protect AI technology and innovation.[5]

The market for artificial intelligence software automation is expected to grow substantially in the next five years.[6] Estimates are that the artificial intelligence market will grow from approximately $10 billion in 2019 to $125 billion by 2025. As artificial intelligence grows in importance and use, the role of internal auditors will have to evolve in lockstep to address a variety of new challenges that have yet to be fully contemplated.
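To put those estimates in perspective, the implied compound annual growth rate can be worked out directly. The sketch below is a back-of-the-envelope calculation only: it assumes the approximate $10 billion (2019) and $125 billion (2025) figures quoted above and treats the span as six compounding years. The function name `implied_cagr` is illustrative and does not come from the cited forecast.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate implied by two endpoint values."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures are the approximate estimates quoted in the text (in $ billions).
# Assumption: 2019 to 2025 is treated as six compounding years.
rate = implied_cagr(start_value=10.0, end_value=125.0, years=2025 - 2019)
print(f"Implied annual growth: {rate:.1%}")
```

Under these assumptions, the market would need to grow by a little over 50% every year to reach the 2025 estimate.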

In anticipation of this growth, standards organizations such as the American National Standards Institute (ISO/IEC JTC 1/SC 42), NIST (EO 13859) and the U.K.’s Information Commissioner’s Office (ICO) have begun developing frameworks and standards for artificial intelligence. The standards development process will take time, with different standards setters focusing on specific areas, such as data privacy, ethical use or the technical design of AI systems. Surprisingly, the AICPA, the standards setter for public accounting firms, and the Institute of Internal Auditors (IIA) have not yet developed formal professional standards to guide auditors in the use and audit of artificial intelligence systems, leaving auditors with little guidance on critical issues related to data privacy, ethical standards in AI audits and audit risks during the implementation and output of AI systems.[7],[8],[9] In addition, proposals from certified public accountants have advocated for formal redress of risks and ethical standards, including algorithmic bias, data management and privacy issues in audits.[10]

To be fair, both organizations have written whitepapers on the topic; however, the articles that exist are guidance-based, open to interpretation and do not ensure that critical protections are in place given the potential for “black box” results that lack traceability and accountability in the event of failure or fraud. As a result, audit executives will be required to develop their own frameworks for auditing artificial intelligence systems. Therefore, now is the time to get up to speed on standards development initiatives to prepare.

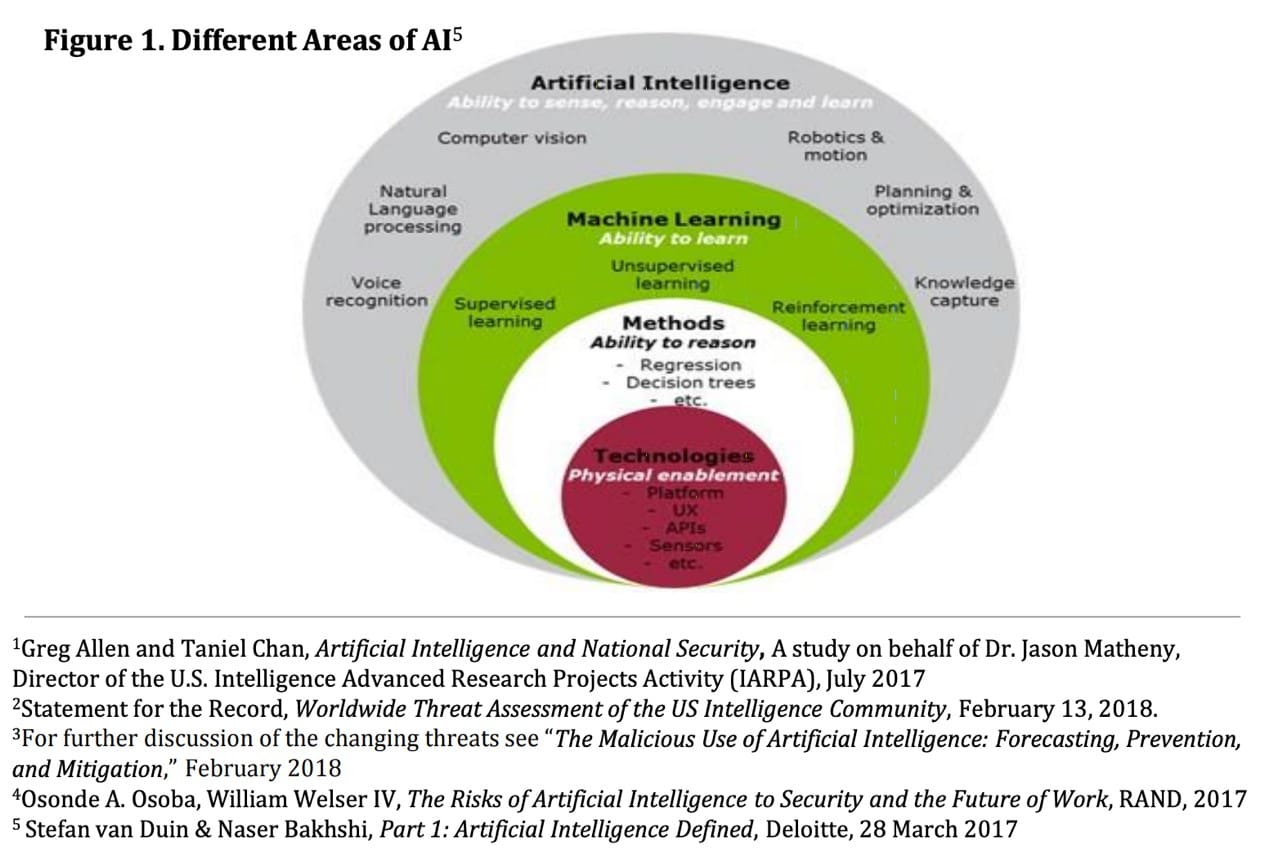

Artificial intelligence has become a buzzword used to describe even simple automation, but the differences are important to understand. Each level of automation plays a valuable role, but each serves different uses. Robotic process automation (RPA) is the lowest level of automation.[11] RPA follows strict rules and, when programmed properly, executes repetitive processes such as automating accounting workflows, performing data collection and automatically transferring information without human interaction.[12] Organizations have begun to combine one or more levels of intelligent automation to achieve higher performance and operational efficiency. (See Figure 1.)

AI will require new oversight models, such as human/machine collaboration in decision-making processes typically reserved for management, and these will require clear ground rules of engagement between audit and management. Artificial intelligence may become the most disruptive technological development to date, creating new opportunities and risks in every aspect of business and life. AI also gives internal audit an opportunity to lead in audit assurance by educating business leaders on the safe use and implementation of AI systems.

There should not be a “one size fits all” audit framework for artificial intelligence, nor should any standard remain static given the rapid pace of change in technology. With that said, there are common elements to be considered in preparing for an artificial intelligence engagement through the development of an AI ecosystem that captures key risk areas of focus.

Considerations for Planning an Artificial Intelligence Engagement

The key elements of an AI audit framework include, but are not limited to, the following:

The 6 Elements of an AI Ecosystem

- Artificial intelligence ethics and governance models

- Formal standards and procedures for the implementation of artificial intelligence engagements

- Data and model management, governance and privacy

- Understanding the human-machine integration, interactions, decision-support and outcome

- Third-party AI vendor management

- Cybersecurity vulnerability, risk management and business continuity

These represent a framework for internal audit to consider as a supplement to an audit engagement of artificial intelligence. The six elements are not listed in order of importance; however, until professional audit standards and procedures are developed, each should be considered in the risk assessment phase of audit planning. In the International Standards for the Professional Practice of Internal Auditing (Standards), updated in January 2017, sections 2010.A1, 2010.A2 and 2010.C1 provide guidance for establishing a “risk-based” audit plan.[13] Chief Audit Executives may need to re-examine risks across the enterprise as a result of implementing artificial intelligence.
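One way to keep all six elements in view during audit planning is a simple coverage check. The following is a minimal sketch, not a prescribed audit procedure: the element names are shortened from the list above, while `AI_ECOSYSTEM_ELEMENTS`, `unassessed_elements` and the sample planning status are hypothetical names and data introduced purely for illustration.

```python
from __future__ import annotations

# Shortened labels for the six ecosystem elements listed in the article.
AI_ECOSYSTEM_ELEMENTS = (
    "Ethics and governance models",
    "Standards and procedures for AI engagements",
    "Data and model management, governance and privacy",
    "Human-machine integration and decision support",
    "Third-party AI vendor management",
    "Cybersecurity, risk management and business continuity",
)


def unassessed_elements(assessed: set[str]) -> list[str]:
    """Return the ecosystem elements not yet covered in the risk assessment."""
    unknown = assessed - set(AI_ECOSYSTEM_ELEMENTS)
    if unknown:
        raise ValueError(f"unrecognized elements: {sorted(unknown)}")
    # Preserve the list order so the gaps read the same way as the framework.
    return [e for e in AI_ECOSYSTEM_ELEMENTS if e not in assessed]


# Hypothetical planning status for a single engagement.
assessed_so_far = {
    "Ethics and governance models",
    "Third-party AI vendor management",
}
gaps = unassessed_elements(assessed_so_far)
print(f"{len(gaps)} of {len(AI_ECOSYSTEM_ELEMENTS)} elements still need a risk assessment:")
for element in gaps:
    print(f"- {element}")
```

A check like this only confirms that each element has been looked at; how deeply each one is assessed still depends on how AI is applied in the organization.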

Artificial intelligence represents a novel risk, meaning there may be no historical observations or risk data from which auditors, senior management or the board can infer the scope of risks. Risk assessments of each of the six elements should be considered in the context of how AI will be applied. Artificial intelligence has already been applied in diverse industries, each with its own inherent risks.[14] The integration of human-machine interactions, where AI decisions are relied upon or used in conjunction with human actors, represents dynamic risks that require higher levels of attention. The Boeing 737 MAX is one example where over-reliance on automated systems and an underestimation of risks can result in catastrophic failure, even in industries, like aerospace, where AI has a history of use assisting pilot performance.

Audit executives should work with senior executives and the board to establish ethical standards and governance models for the use of artificial intelligence. Artificial intelligence will require clarity on data privacy, data governance, vendor management, human resources, compliance, cybersecurity and risk management functions and policy. Cross-functional teams of oversight and business leaders may be required to establish new operating models from which audit assurance can be formally established for each impacted area in an organization. For example, if AI is used in patient diagnoses, physicians, nurses and others will be needed to measure improvements or discrepancies in diagnostic accuracy to evaluate new medical interventions for trust models of patient care.[15] Subjective risk assessments will be insufficient in an AI world, where reputational damage may grow exponentially and threaten the survival of the firm.

Getting Started

Now is the time to get up to speed!

Each organization will have to make substantial structural changes in anticipation of AI implementation, AI audits and planning for auditing the key outputs of AI systems. The proposed AI ecosystem is an informal checklist for preparing for structural changes to audit’s role, whether in supporting AI projects or in planning an engagement of intelligent systems. No two AI projects are exactly alike; everyone is learning as they go, so don’t worry about experience at this point.

An AI audit is not a traditional engagement, but many of the existing IT standards cover the minimum bases. However, factors related to corporate culture and the intended uses of AI will require engagement at the enterprise level to build a sustainable AI audit practice. This is an exciting time for audit executives to play a leadership role in providing assurance, even if more formal guidance and professional standards take time to develop.

In the meantime, new guidance is being developed out of a collective process by a consortium of countries to leverage existing standards and build new ones to provide consistency in assurance.

[1] The Jamestown Foundation, Russia Adopts National Strategy for Development of Artificial Intelligence

[2] Betakit, Canada, France Officially Launch Global Initiative to Advance Responsible Use of AI

[3] ISO.org, ISO/IEC JTC 1/SC 42: Artificial Intelligence

[4] NIST, NIST and the Executive Order on Maintaining American Leadership in Artificial Intelligence

[5] Whitehouse.gov, Executive Order on AI

[6] Omdia, Artificial Intelligence Market Forecasts

[7] Harvard Law School Forum on Corporate Governance, Emerging Technologies, Risk, and the Auditor’s Focus

[8] The Financial Brand, The Rise of Machine Learning and the Risks of AI-Powered Algorithms

[9] Business Today, Robotic process automation failure rate is 30-50%, says EXL CEO Rohit Kapoor

[10] PICPA, Accounting AI and Machine Learning: Applications and Challenges

[11] Hackernoon, Why Robotic Process Automation Is Not Artificial Intelligence

[12] The CPA Journal, How Robotic Process Automation Is Transforming Accounting and Auditing

[13] The Institute of Internal Auditors, International Standards for the Professional Practice of Internal Auditing (Standards) 2017

[14] Wikipedia, Applications of artificial intelligence

[15] BMJ Journals, Artificial intelligence in healthcare: past, present and future

James Bone’s career has spanned 29 years of management, financial services and regulatory compliance risk experience with Frito-Lay, Inc., Abbott Labs, Merrill Lynch, and Fidelity Investments. James founded Global Compliance Associates, LLC and TheGRCBlueBook in 2009 to consult with global professional services firms, private equity investors, and risk and compliance professionals seeking insights in governance, risk and compliance (“GRC”) leading practices and best-in-class vendors.
